Cardiologists have trained a large AI model to assess heart structure and function

Last reviewed: 02.07.2025

Artificial intelligence experts at Cedars-Sinai and the Smidt Heart Institute created a dataset of more than 1 million echocardiograms (video ultrasounds of the heart) and their corresponding clinical interpretations. Using this database, they developed EchoCLIP, a powerful machine learning algorithm that can “interpret” echocardiogram images and assess key metrics.

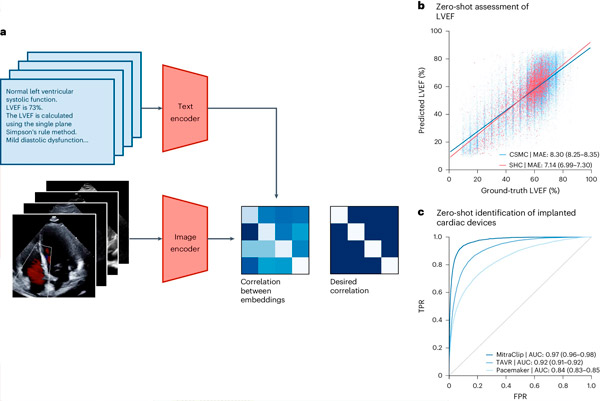

The design and evaluation of EchoCLIP, described in a paper published in Nature Medicine, suggest that the model can interpret a patient's echocardiogram at a specialist level, assessing cardiac function, the results of past surgeries, and implanted devices, and that it may help physicians identify patients in need of treatment.

The EchoCLIP foundation model can also identify the same patient across multiple videos, studies, and time points, and recognize clinically important changes in the patient's heart.

"To our knowledge, this is the largest model trained on echocardiography images," said lead study author David Ouyang, MD, a faculty member in the Division of Cardiology at the Smidt Heart Institute and the Division of Artificial Intelligence in Medicine.

"Many previous AI models for echocardiograms are trained on only tens of thousands of examples. In contrast, EchoCLIP's uniquely high performance in image interpretation is the result of training on nearly ten times more data than existing models."

"Our results show that large datasets of medical imaging and expert-verified interpretations can serve as the basis for training medical foundation models, which are a form of generative artificial intelligence," Ouyang added.

EchoCLIP workflow. Source: Nature Medicine (2024). DOI: 10.1038/s41591-024-02959-y

He noted that this advanced foundation model could soon help cardiologists evaluate echocardiograms by generating estimates of cardiac measurements and by identifying common diseases and changes over time.

The research team created a dataset of 1,032,975 cardiac ultrasound videos and corresponding expert interpretations to develop EchoCLIP. Key findings from the study include:

- EchoCLIP demonstrated high performance in assessing cardiac function from cardiac images.

- The foundation model was able to identify implanted intracardiac devices such as pacemakers, mitral valve implants, and aortic valve implants from echocardiogram images.

- EchoCLIP accurately identified unique patients across studies, detected clinically important changes such as previous cardiac surgery, and enabled the generation of preliminary text interpretations of echocardiogram images.

"Foundation models are one of the newest areas in generative AI, but most models do not have enough medical data to be useful in healthcare," said Christine M. Albert, MD, MPH, chair of the Division of Cardiology at the Smidt Heart Institute.

Albert, who was not involved in the study, added: "This new foundation model integrates computer vision for echocardiogram image interpretation with natural language processing to enhance cardiologists' interpretations."
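For readers curious how a model might link images with text, the sketch below illustrates one common approach: both the image and a set of candidate report phrases are mapped to vectors, and the phrase whose vector points in the most similar direction is ranked first. This is an illustrative assumption, not the method described in the article or the paper. The function names, the candidate phrases, and the three-dimensional toy vectors are all hypothetical; a real system would obtain its vectors from trained image and text encoders.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine similarity between each row of a and each row of b."""
    a = a / np.linalg.norm(a, axis=-1, keepdims=True)
    b = b / np.linalg.norm(b, axis=-1, keepdims=True)
    return a @ b.T


def rank_reports(image_embedding, text_embeddings, reports):
    """Rank candidate report phrases by similarity to one image embedding."""
    scores = cosine_similarity(image_embedding[None, :], text_embeddings)[0]
    order = np.argsort(-scores)  # highest similarity first
    return [(reports[i], float(scores[i])) for i in order]


# Hypothetical candidate phrases and hand-made toy embeddings (assumptions).
reports = [
    "normal left ventricular function",
    "pacemaker lead present",
    "prosthetic aortic valve",
]
text_embeddings = np.array([
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
])

# Toy stand-in for the embedding of one echocardiogram video.
image_embedding = np.array([0.1, 0.9, 0.2])

ranked = rank_reports(image_embedding, text_embeddings, reports)
for phrase, score in ranked:
    print(f"{score:.3f}  {phrase}")
```

With these toy numbers the phrase about a pacemaker lead ranks first, because the image vector points mostly along that phrase's direction. The same similarity comparison, applied between two image vectors instead of an image and a phrase, is one plausible way to check whether two studies come from the same patient.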